Simplifying FastAPI Testing: A Practical Approach

Why it matters

Proper test configuration prevents accidental production data manipulation and ensures accurate integration testing.

The big picture

FastAPI offers robust testing tools, but setting up test environments can be tricky. Here’s a streamlined approach using .env files and a custom app factory.

Why should I care about all this?

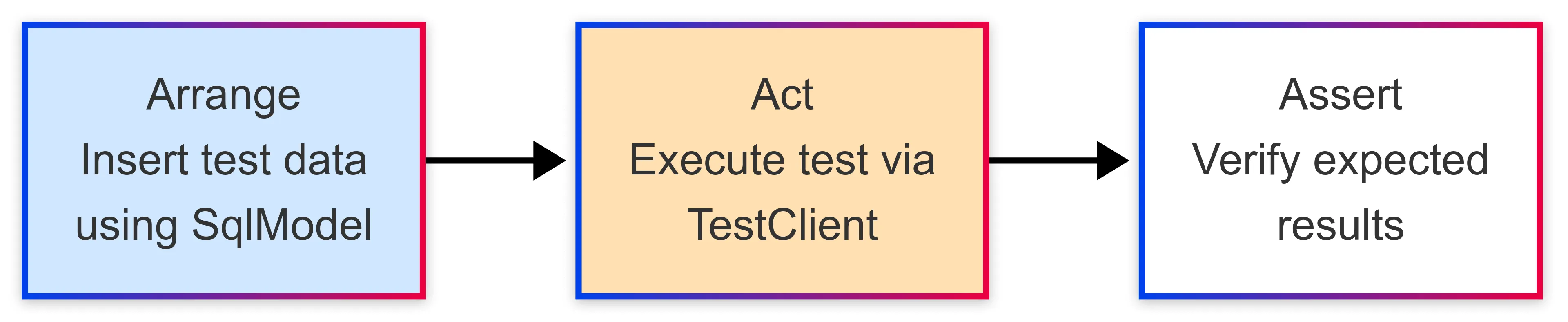

First, you likely don’t want your integration tests to pick up your production configuration, which can happen if your local .env file was modified by mistake. Second, in the Arrange-Act-Assert pattern, you may need to insert data in the ‘Arrange’ stage. If your test code and the application running under TestClient load different settings (for example, different database URLs), that data won’t be where the application looks for it when the test executes.

FastAPI includes testing tools and patterns for writing unit and integration tests (see FastAPI Testing). A great tool for integration testing is TestClient, which FastAPI re-exports from Starlette. It is built on the httpx package and lets you make HTTP requests to the application in-process, without running a server (see TestClient).

Additionally, FastAPI recommends using Pydantic Settings for configuration (see FastAPI Settings). Because Pydantic Settings can read .env files through python-dotenv, we can use the same Settings class for our tests, as long as the test values are in place before the settings are first read.

My preference is to use .env files for configuration and environment variables. Keeping configuration in environment variables, populated locally from a .env file, follows the 12-factor approach.

However, there are some drawbacks to overcome for practical adoption:

- The recommended approach is to use `app.dependency_overrides` to override the settings instance, especially when the settings are provided through Dependency Injection (see dependency_overrides).
  - However, I have found this complicated and sometimes flaky.
- By default, your Settings class (not FastAPI itself) picks up the `.env` file configured in its `env_file` option, typically at the root of your project, and won’t load anything like a `.env.testing` file.
- You will need to organize your code so that the main FastAPI application is not instantiated when running tests.
  - This is especially important if you are caching your settings with the `@lru_cache()` decorator, because the first call fixes the settings for the rest of the test run.

Key improvements:

- Separate test configuration: Use a `.env.testing` file to isolate test settings.
- App factory pattern: Create your FastAPI app on demand, so tests build it only after the test settings are loaded.
- Simplified dependency overrides: Avoid complex overrides by loading test settings early.
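Loading test settings early matters because a cached `get_settings()` freezes whatever environment it sees on its first call. This stdlib-only sketch uses a hypothetical frozen dataclass in place of a Pydantic BaseSettings class to show the ordering problem:

```python
import os
from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class Settings:
    # Hypothetical stand-in for a Pydantic BaseSettings class
    app_name: str


@lru_cache()
def get_settings() -> Settings:
    # Reads APP_NAME once; the result is cached for the life of the process
    return Settings(app_name=os.environ.get("APP_NAME", "Awesome API"))


# Simulates main.py calling create_app() at import time, before test values load
os.environ["APP_NAME"] = "Awesome API"
early = get_settings()

# Loading test values afterwards has no effect on the cached instance
os.environ["APP_NAME"] = "Test App"
print(get_settings().app_name)  # Awesome API

# Clearing the cache (or loading test values before the first call) fixes it
get_settings.cache_clear()
print(get_settings().app_name)  # Test App
```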

How it works

I have a repo for the full example: https://github.com/getmarkus/fastapi-test-settings.

- Ensure you have a `.env.testing` file in the root of your project, next to your regular `.env`:

```
# .env
APP_NAME="Awesome API"
```

```
# .env.testing
APP_NAME="Test App"
```

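- Include a `conftest.py` that uses python-dotenv to load `.env.testing` before the settings are read:

```python
# conftest.py
from pathlib import Path

from dotenv import load_dotenv

from app.config import get_settings  # "app" is a placeholder for your package name

# Load .env.testing before anything calls (and caches) get_settings();
# override=True replaces values already set in the environment
dotenv_path = Path(".env.testing")
load_dotenv(dotenv_path=dotenv_path, override=True)
settings = get_settings()
```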

- Extract the creation of the FastAPI app into a function, here in `factory.py`:

```python
# factory.py
from fastapi import FastAPI

from .config import get_settings


def create_app() -> FastAPI:
    # Settings are read when the app is created, not when this module is imported
    settings = get_settings()
    app = FastAPI()

    @app.get("/")
    async def read_main():
        return {"msg": settings.app_name}

    return app
```

- From your `main.py` file, import `create_app` and call it:

```python
# main.py
from .factory import create_app

app = create_app()
```

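- In your `conftest.py` file, set up an `app` fixture:

```python
import pytest

from app.factory import create_app  # "app" is a placeholder for your package name


@pytest.fixture(name="app")
def test_app():
    """Create test app instance only during test execution."""
    return create_app()
```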

- Create a test that uses a `client` fixture, a TestClient wrapping the `app` fixture:

```python
from fastapi.testclient import TestClient

from .conftest import settings


def test_read_main(client: TestClient):
    response = client.get("/")
    assert response.status_code == 200
    # The value comes from .env.testing, not from .env
    assert response.json() == {"msg": "Test App"}
    assert response.json() == {"msg": settings.app_name}
```